Fun, Easy, Fan Controlled

Streamline your event by partnering with a single technology provider to enhance fan engagement, digital sponsorship, and crowd data analysis

At AMPLIFi, we are building a platform that lets anyone tap into nearby cameras to capture group memories at iconic places. Put differently, AMPLIFi installs robotic cameras in venues such as sports stadiums, and then lets fans control those cameras remotely from their phones to capture stunning group photos to share, all without an app. The AMPLIFiCAM integrates fine hardware components (think high-accuracy industrial motors and top-class camera equipment) with an equally fine suite of software.

AMPLIFiCAM needs to be automated, robust and accurate. These three boxes are not difficult to check in a software-only product. However, when hardware components are added to the mix, interesting challenges arise. Consider alarm clocks as an example. For me, using the alarm clock app on my phone is much easier than setting my traditional childhood wind-up clock. Everything appears seamless to the end user in the software-only solution, whereas I think we can agree that throwing an alarm clock across the room, just to stop the ringing, does not qualify as a “seamless experience”.

All AMPLIFiCAM systems are designed for one purpose: to deliver the perfect shot that captures each group memory. When users punch in their seat number, a robotic head swivels to find them. The system is very robust: it will always move to find you, be it in dust or under heavy rain. But is it accurate?

Imagine yourself at the US Open finals. There is a break and you are having a good time with your buddies. An epic AMPLIFiCAM picture can help capture the moment. You quickly pull up the web page, type in your seat number, and you see the camera point somewhere other than your seat. That is incredibly problematic, not only because it is a bad experience, but also because it is a privacy risk.

In every venue, each seat is mapped to a single position of the AMPLIFiCAM, which is a challenging process on its own. This means that for every seat number, the camera must always be looking at the same spot. One would expect the AMPLIFiCAM setup to be accurate by default. After all, the entire system is locked into a bracket, and nobody should be able to touch it. However, besides mechanical backlash, there are various kinds of natural incidents that can affect the positional accuracy[1] of the AMPLIFiCAM: anything from high wind and heavy rain to a fat angry pigeon.

Since fans will eventually enter the stadium and take up their seats, we cannot guarantee that a seat will always look the same to the camera. However, we can find a unique spot that we know will never change, like a gate number or decal, and use it as a reference.

This idea was inspired by “Where’s Waldo?”. Waldo always has a known and unchanging look, and winning the game means finding that familiar visual somewhere within a big area. In this project the reference image plays the role of “Waldo”, and the objective is to find the AMPLIFiCAM position at which he appears, so that any shift can be identified and fixed by examining how Waldo’s position changes over time.

Now we have an idea, but how do we put it into action? We already have a name for this project: Waldo! At AMPLIFi we are zealous believers in lean execution: our product development philosophy is lean, simple, and effective. As a great engineer once said: "Make it work, make it better, make it perfect!"[2].

In “Where’s Waldo?”, I use a simple strategy to win. I break the scene into different sections. By going through each section, I eliminate the areas that do not include Waldo. Once Waldo is found within a section, it becomes easy to pinpoint his exact location.

We apply the same principle in the Waldo application. To find him (the Reference Image), the arena is first divided into sections, and the camera swivels across the stadium, moving from section to section and capturing an image at every stop. Each image is then examined by a computer vision engine that decides whether Waldo appears in it. Obviously, we stop as soon as Waldo is found!

As any good player knows, the person who finds Waldo the fastest is the winner. This is just as important for AMPLIFi: in order to deliver the best experience, we want to identify the shift and fix it as soon as possible, ideally immediately after it occurs. If the check needs to run frequently, it had better be fast.

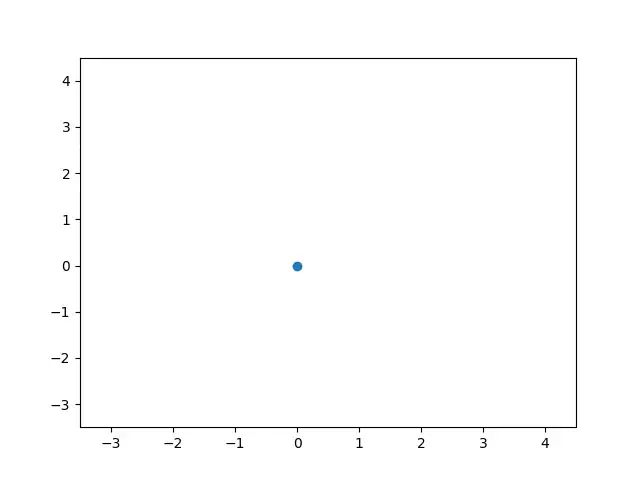

Since we already know where the reference position was last time, that’s where we start our search. The hope is that if the shift is small, it’ll be caught immediately, and the camera doesn’t need to do a lot of work to fix it. If the shift is bigger, we follow our winning section-by-section strategy. Mathematically, the fastest way is to move outwards in a spiral pattern[3], simply because it reduces the number of jumps the motors have to make.

In Waldo, as soon as the motors arrive at a position, the visual viewed by the camera is examined for matches to the Reference Image. To perform this image comparison, I picked the “Feature Matching” approach for Waldo.

Feature Matching has a descriptive name: extract the visual features from both images, and see if those features can be matched from one image to the other. A match between two features indicates how similar they are. Each feature is located at a pixel in the image, called a keypoint. Keypoints and features are identified by an extractor, and they are then matched by a matcher.

There are several algorithms available for both the extractor and the matcher. Some of them are popular for being fast, while others are known to be more accurate. We tend to choose a feature-rich visual as Waldo: a mix of letters, shapes and logos. So, for this project, I selected a fast extractor in combination with an accurate matcher. This means that we are quick to identify image features, but careful when determining the correctness of the matches between the features of two images.

For a software engineer, it is probably impossible to write this many words without showing any code. At least for us here at AMPLIFi, it is always better to know what you want to do before doing it!

So let’s dig in! The motors are controlled through a serial interface. The camera takes pictures using gphoto2. The computer vision algorithms are all implemented in OpenCV. We use the ORB (Oriented FAST and Rotated BRIEF) feature extractor, and a FLANN implementation of k-Nearest Neighbour matching, with k=2. That means we find the 2 closest matches for each feature of Waldo, and through a ratio test[4], we filter out the wrongly matched features.
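To make the ratio test concrete, here is a minimal, dependency-free sketch. It is not the Waldo code: a brute-force Hamming search stands in for the FLANN matcher, descriptors are toy 8-bit integers rather than real ORB descriptors, and the function names and the 0.8 default are assumptions made for illustration.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def ratio_test_matches(query, train, ratio=0.8):
    """Return (query_index, train_index) pairs that pass the ratio test.

    For each query descriptor, find its two nearest train descriptors
    (k = 2) and keep the best one only if it is clearly closer than
    the runner-up: best_distance < ratio * second_distance.
    """
    good = []
    for qi, q in enumerate(query):
        # Brute-force 2-NN search; a stand-in for the FLANN matcher.
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if len(ranked) < 2:
            continue  # the ratio test needs two neighbours
        (best, best_idx), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            good.append((qi, best_idx))
    return good


# Toy 8-bit descriptors (hypothetical values, for illustration only).
reference = [0b11110000, 0b10101010]
scene = [0b11110001, 0b00001111, 0b10011001, 0b01100110]
print(ratio_test_matches(reference, scene))  # [(0, 0)]
```

The first reference descriptor has one clear nearest neighbour, so it is kept. The second is equally close to several scene descriptors, which is exactly the ambiguous case the ratio test is meant to reject.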

The code for all of that is actually quite fancy and elegant. But my favourite part of playing Waldo was implementing the spiral movement. I like this piece since the math behind it is clever and simple. Here is a Python implementation:

```python
def create_spiral(N):
    '''
    Create a list of grid positions, visited in a spiral fashion,
    in an N-by-N grid.

    Directions:
        Up: (0, +1), Down: (0, -1)
        Left: (-1, 0), Right: (+1, 0)
    '''
    spiral = []

    # starting point
    x = 0
    y = 0

    # initial direction is downward; it is rotated at the origin,
    # so the first actual move is to the right
    Dx = 0
    Dy = -1

    for i in range(N * N):
        # make sure we are inside the grid
        if (-N/2 < x) and (x <= N/2) and (-N/2 < y) and (y <= N/2):
            spiral.append((x, y))

        # do we need to change directions?
        if (x == y) or (x < 0 and x == -y) or (x > 0 and x == 1-y):
            # to keep it a spiral, we need a 90 deg rotation
            Dt = Dx
            Dx = -Dy
            Dy = Dt

        # move to the next point
        x += Dx
        y += Dy

    return spiral
```

My personal programming style is to explain the code with comments. We enforce this habit during our code reviews. The number of “Added some comments” commit messages is far too high in the repos! For better illustration, this is a spiral pattern for N = 8:

In the above figure, each point corresponds to the center of a section that the motors move to. At each position an image is captured and then matched against Waldo. The spiral movement stops as soon as a match is found, and the stop position becomes the new Reference Position. The shift is then calculated as the difference between the motor steps of Waldo’s current position and his previously recorded one.
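The search loop described here can be sketched as follows. This is an illustration under assumptions, not the production code: `find_reference`, `step_size` and the `is_match` callback (which would move the motors, capture an image and run the matcher) are hypothetical names, and the motor positions in the usage example are made up.

```python
def find_reference(last_position, offsets, step_size, is_match):
    """Visit candidate positions until the reference image is found.

    last_position: (pan, tilt) motor steps where Waldo was last seen.
    offsets: grid offsets in visiting order (e.g. the spiral list),
             starting with (0, 0).
    step_size: (pan, tilt) motor steps between neighbouring sections.
    is_match: hypothetical callback that moves the motors, captures an
              image and returns True when Waldo is in view.
    Returns (new_position, shift), or None when nothing matched.
    """
    for dx, dy in offsets:
        candidate = (last_position[0] + dx * step_size[0],
                     last_position[1] + dy * step_size[1])
        if is_match(candidate):
            # Shift = difference between the new and the recorded position.
            shift = (candidate[0] - last_position[0],
                     candidate[1] - last_position[1])
            return candidate, shift
    return None


# Simulated run: Waldo has drifted one section right and one section up.
visited = []

def fake_match(position):
    visited.append(position)
    return position == (1050, 520)

spiral_start = [(0, 0), (1, 0), (1, 1), (0, 1), (-1, 1)]
result = find_reference((1000, 500), spiral_start, (50, 20), fake_match)
print(result)   # ((1050, 520), (50, 20))
print(visited)  # [(1000, 500), (1050, 500), (1050, 520)]
```

Because the first offset is (0, 0), an unshifted camera is confirmed after a single capture, which matches the idea of starting at the last known reference position.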


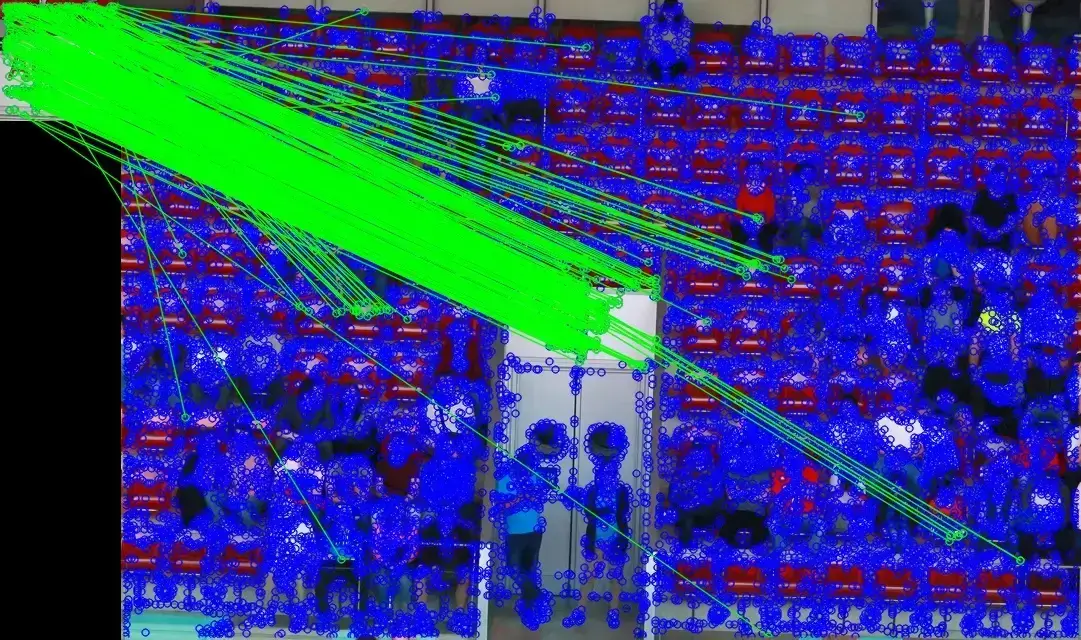

We have all the ingredients to make a first prototype of Waldo. My favourite prototyping language is Python, simply because of the minimal development time[5]. For a proof of concept, the first prototype worked very well, as showcased in the pictures below[6]. Upon AMPLIFiCAM installation, Waldo was captured at a Reference Position. In this case, after a few weeks, a slight shift was visible. The Waldo program successfully identified this shift (4 pixels in width, 7 pixels in height), and reported back the corresponding adjustment of motor steps.

The preset Reference Image. Waldo is the “A03” sign at the centre of the shot.

What the camera sees at the Reference Position: there is a slight shift.

There are 282 quality match points between Waldo and the shifted view of the camera. This resulted in detecting a pixel shift of (-4, +7) between the centers of the two images.
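One plausible way to turn matched keypoints into a single pixel shift, and then into a motor correction, is sketched below. The article does not say how Waldo does this, so everything here is an assumption: the median estimator, the function names, the `steps_per_pixel` calibration constant and the sign convention of the correction are all hypothetical.

```python
from statistics import median


def pixel_shift(matched_pairs):
    """Median displacement (dx, dy) from reference to current keypoints.

    matched_pairs: list of ((x_ref, y_ref), (x_cur, y_cur)) tuples.
    The median is used so a few bad matches do not skew the estimate.
    """
    dxs = [cur[0] - ref[0] for ref, cur in matched_pairs]
    dys = [cur[1] - ref[1] for ref, cur in matched_pairs]
    return median(dxs), median(dys)


def to_motor_steps(shift, steps_per_pixel):
    """Convert a pixel shift into the motor correction that undoes it.

    steps_per_pixel is a hypothetical per-axis calibration constant;
    the sign convention depends on how the hardware is mounted.
    """
    return (-round(shift[0] * steps_per_pixel[0]),
            -round(shift[1] * steps_per_pixel[1]))


# Made-up keypoint pairs: four agree on (-4, +7), one is a bad match.
pairs = [
    ((100, 200), (96, 207)),
    ((300, 50), (296, 57)),
    ((10, 10), (6, 17)),
    ((500, 400), (530, 380)),  # outlier
    ((250, 120), (246, 127)),
]
shift = pixel_shift(pairs)
print(shift)                              # (-4, 7)
print(to_motor_steps(shift, (2.0, 2.0)))  # (8, -14)
```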

Although the first prototype performed exceptionally, there were a few issues we bumped into.

The Waldo program needs to run fast. Despite using the spiral search and the ORB extractor, the Python implementation was simply too slow. I had to rewrite the program in C++ to get it fast enough for our specifications.

In several cases, the Waldo program found a match for Waldo in the scene, but on manual inspection, no similarity between the two images could be seen. One key method to decrease the false alarm rate is to tweak the Ratio Test. With some trial and error, I found that tightening the ratio improves Waldo. Currently the ratio is set to 80%[7].

Waldo would sometimes successfully report a pixel shift, but with low accuracy. This happened when the number of symmetrical matches that passed the ratio test was a bit low (under 10 matches). Adding a lower bound for the number of found matches resolved this problem immediately.
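The last fix can be sketched in a few lines. This is an illustration only: the function names are hypothetical, "symmetrical" is interpreted here as a match found in both directions (reference to scene and scene to reference), and the bound of 10 simply mirrors the "under 10 matches" observation above rather than a confirmed production value.

```python
MIN_MATCHES = 10  # assumed bound, mirroring the "under 10" observation


def symmetric_matches(ref_to_scene, scene_to_ref):
    """Keep only the pairs that were found in both matching directions.

    ref_to_scene: (ref_index, scene_index) pairs that passed the ratio test.
    scene_to_ref: (scene_index, ref_index) pairs that passed the ratio test.
    """
    reverse = set(scene_to_ref)
    return [(r, s) for r, s in ref_to_scene if (s, r) in reverse]


def is_reliable(matches, min_matches=MIN_MATCHES):
    """Accept a detection only when enough symmetric matches remain."""
    return len(matches) >= min_matches


# Made-up match lists: only two pairs agree in both directions.
forward = [(0, 5), (1, 7), (2, 9)]
backward = [(5, 0), (9, 2), (7, 3)]
kept = symmetric_matches(forward, backward)
print(kept)               # [(0, 5), (2, 9)]
print(is_reliable(kept))  # False: too few matches to trust the shift
```

With a check like this, a low-confidence detection is treated as "Waldo not found" and the search simply continues to the next section.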

Waldo has been a great addition to our line of software, simply because it automates the solution to a sneaky problem. I like to think of Waldo as a wholesome example of our engineering process at AMPLIFi. It was created based on a very lean strategy: we only invested in developing a fully fledged product once the proof of concept succeeded in meeting the requirements. This process both minimizes the effort and maximizes the productivity of all engineers. Although I had ownership over Waldo, creating it was definitely a team effort.

This was just a snapshot of the kind of challenges we tackle at AMPLIFi, and I hope you got as excited about this as I was. If this is something you like, consider joining our team. AMPLIFi can be your new home to innovate, experiment and build.

[1] In a big venue (like a football stadium), our cameras would be around 200 meters away from the stands, and the level of accuracy we aim for is half the width of one seat, or 0.1 degrees error tolerance in motor positioning.

[2] I said that.

[3] Technically, a logarithmic spiral is the fastest pattern. In Waldo we use a linear spiral: equally spaced concentric rings around the starting point.

[4] A match is considered high quality only if the distance ratio between the first and second matches (obtained by the 2-NN matcher) is small enough (the ratio threshold is typically between 0.5 and 0.8; Lowe’s paper uses 0.8). The ratio test was first introduced by D. G. Lowe in this paper.

[5] I had to use ctypes in order to interface libgphoto2 in Python. OpenCV has official Python bindings. I used the pyserial library to interface the serial ports.

[6] Pictures obtained at Estadio Manolo Santana, located in Madrid, Spain

[7] This value was found merely by trial and error. In the future, I wish to use a machine learning algorithm to “learn” the best ratio for a set of scene images.